In a new update, the iPhone 16 lineup is finally getting Visual Intelligence, a feature that lets you instantly gather information about anything around you just by snapping a picture.

Integrated into the Camera Control button on iOS 18.2, currently in beta, Visual Intelligence gives you access to third-party services like ChatGPT and Google Search for insights, making it simple to learn about objects, landmarks, restaurant ratings, and even the breed of a dog you encounter.

Below, we'll walk you through what you need to get started with Visual Intelligence, how to access its powerful tools, and practical examples of what you can do with this groundbreaking feature.

Getting Started with Visual Intelligence on iPhone 16

To use Visual Intelligence, you'll need an iPhone 16, iPhone 16 Plus, iPhone 16 Pro, or iPhone 16 Pro Max running iOS 18.2 or later. In addition, Apple Intelligence features must be enabled via Settings » Apple Intelligence & Siri.

Visual Intelligence is built directly into the Camera Control button, meaning you can access it from anywhere you can access the camera interface.

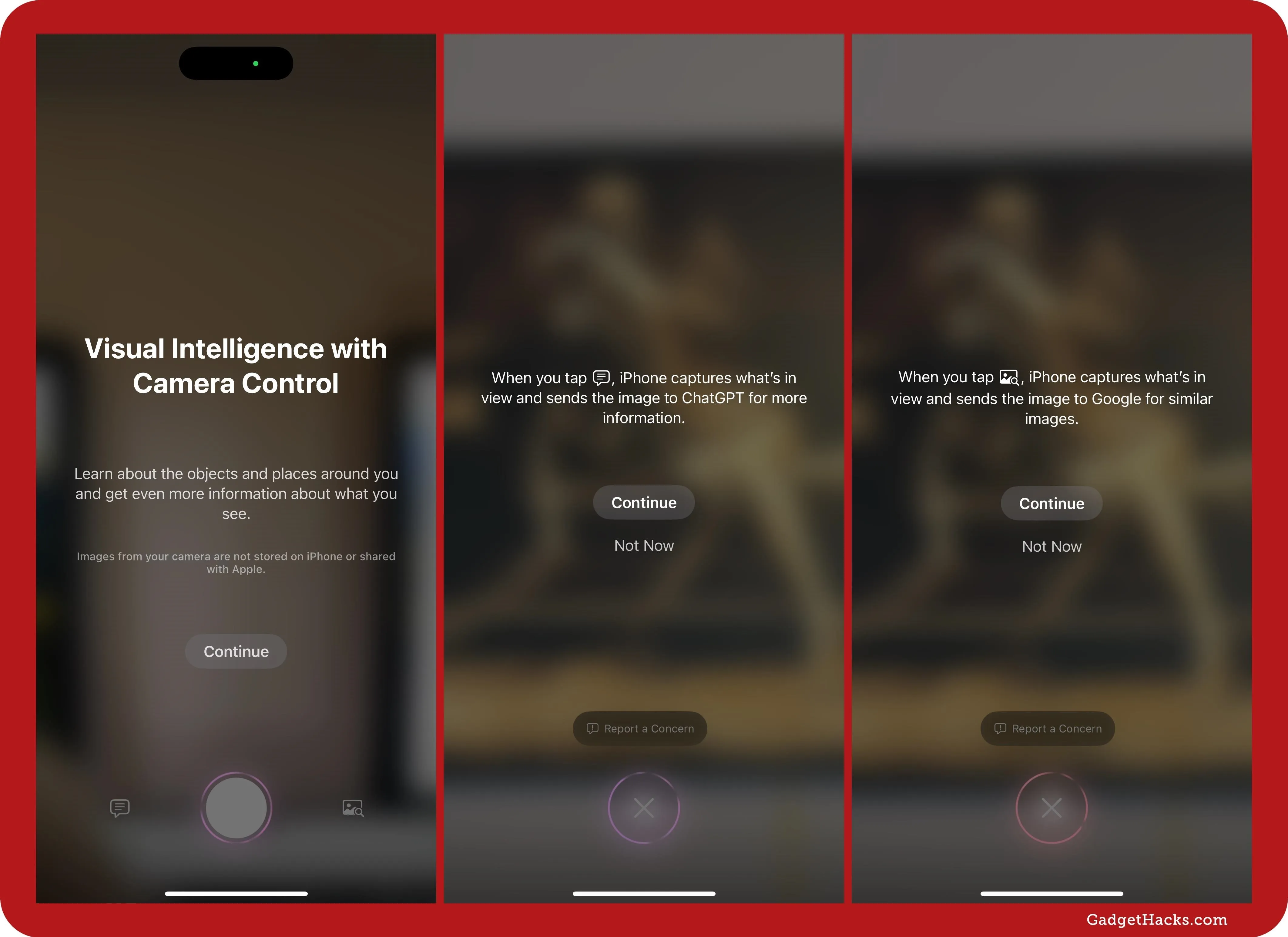

To use it, press and hold the Camera Control button. If it's your first time using Visual Intelligence, Apple will display a splash screen explaining that any images captured for analysis aren't stored on your iPhone or shared with Apple. You'll also get splash screens when first using the Ask and Search features for ChatGPT and Google, respectively, which explain that captured images are sent to ChatGPT or Google for analysis.

Using the Visual Intelligence Interface

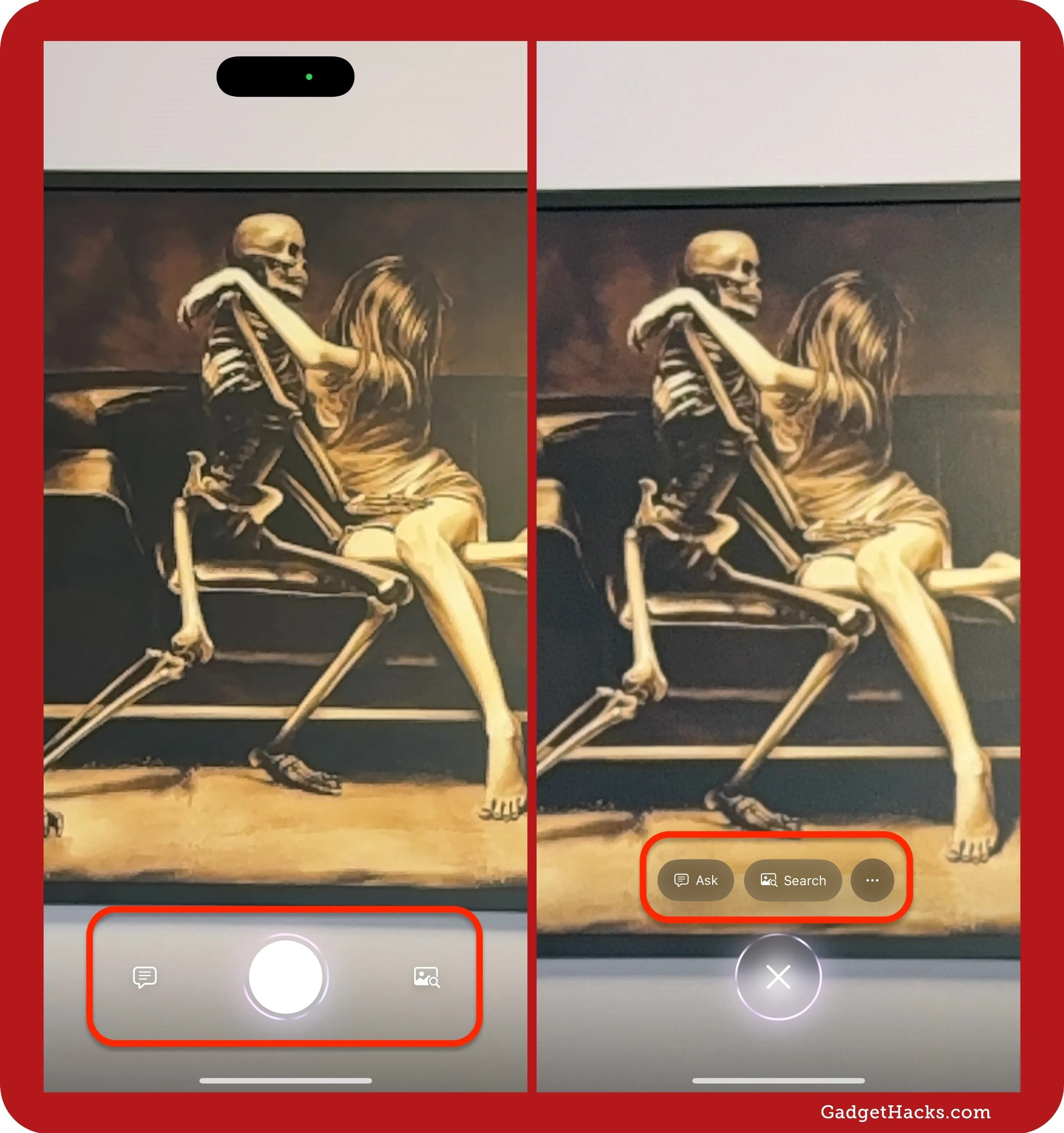

Once you're in the Visual Intelligence interface, you'll see three main components: a large shutter button (Capture), a text bubble icon (Ask), and an image search icon (Search). All three will take a picture of whatever you're pointing your iPhone's camera at, but each has its own purpose, which we'll review below. The Ask and Search buttons will also appear if the Capture button doesn't give you any information.

You can zoom in or out by pinching the screen or lightly pressing the Camera Control button. Zoom is the only option available in the Camera Control settings overlay, so you only need to lightly press the button once and then slide your finger across it to adjust the zoom level.

1. Capture (Shutter)

Tap the shutter button to capture the scene and get custom results from Apple Intelligence. The results will vary depending on what you captured. Here are some of the things you might see from Apple Intelligence:

- Translate text

- Summarize text

- Add an event to Calendar

- Call a phone number

- Visit a website

- View a location in Maps

- Read text aloud

- Order food

- View a menu

- View more options

If Apple Intelligence can't find anything in the image, you can still use the Ask and Search buttons to get information from ChatGPT and Google, respectively, which we'll cover below.

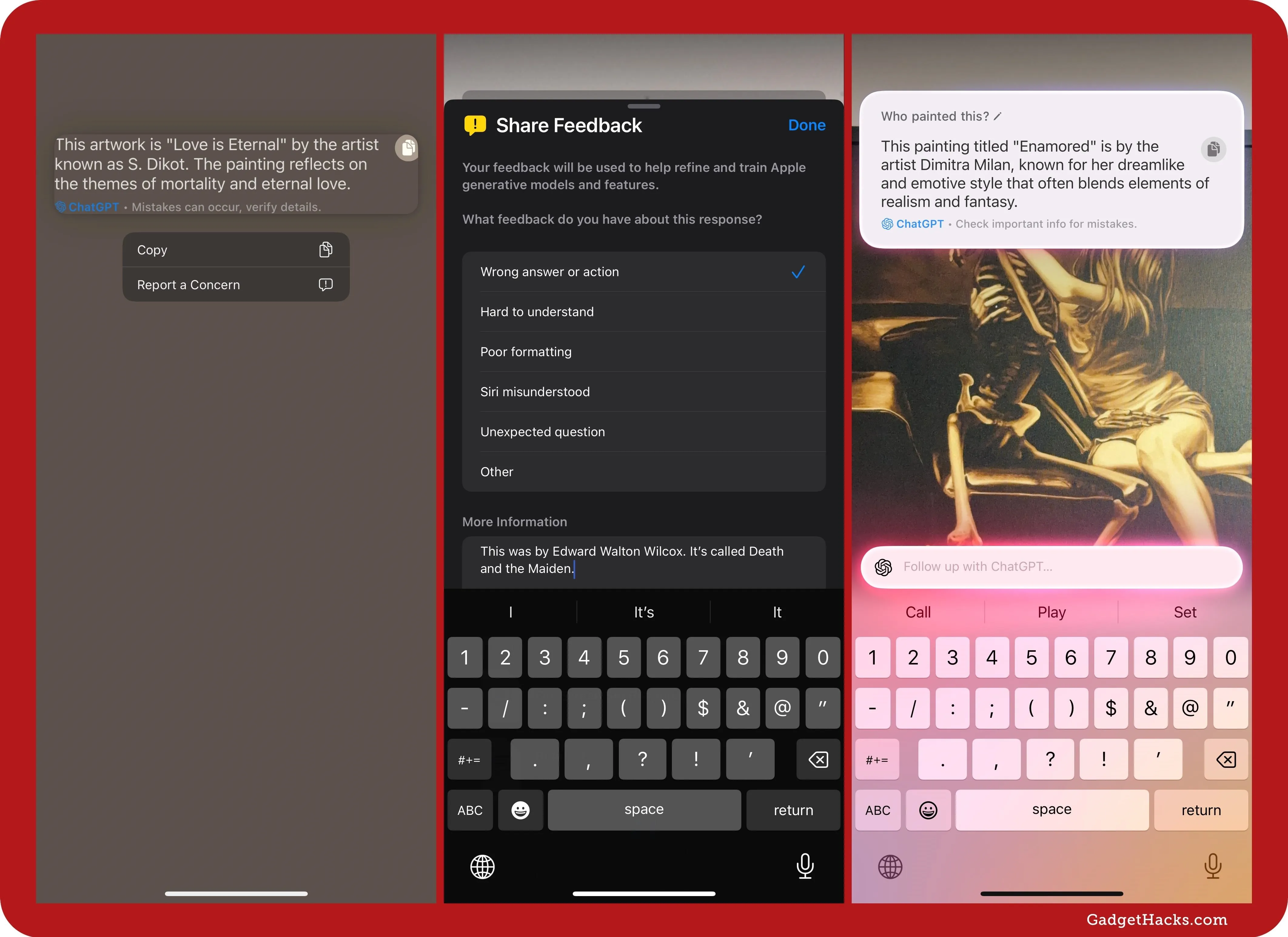

If you're getting incorrect results from Visual Intelligence, you can tap "Report a Concern" when the button appears. It's also available in the More (•••) menu.

2. Ask (ChatGPT)

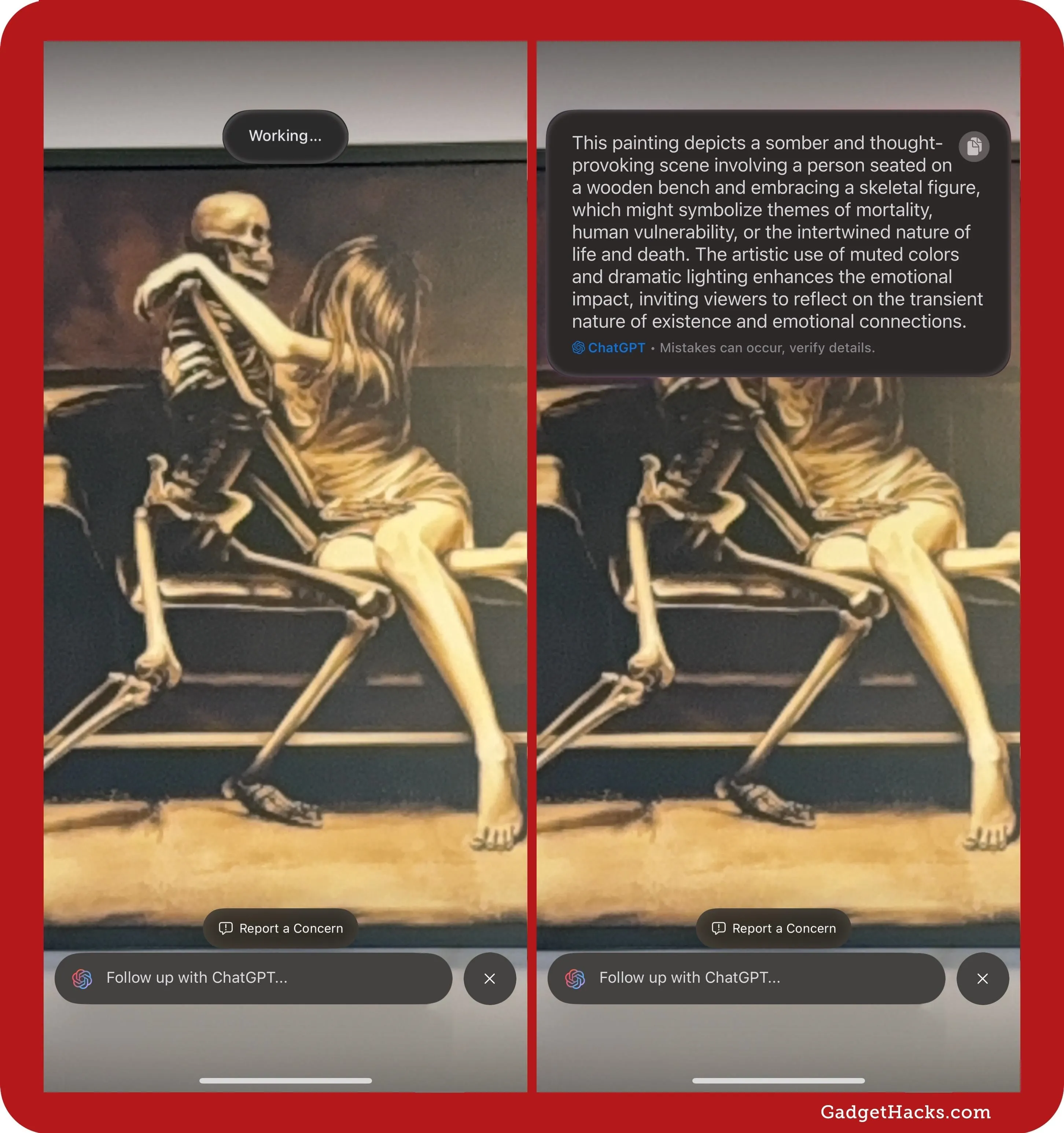

Tap the text bubble icon to get detailed information from ChatGPT, which helps interpret complex scenes and identify hard-to-recognize objects. It also serves as a good backup option when Apple Intelligence itself doesn't have any answers for you.

You can tap the Ask button from the capture screen to immediately take a picture and ask ChatGPT for information about it, or you can tap Ask after capturing an image with the shutter button, in case you want to hear Apple's side of things first.

You'll then see ChatGPT's response, which you can copy to your clipboard, and a "Follow up with ChatGPT" option to continue asking questions for additional details. If you don't get an automatic response from ChatGPT, simply submit a question in the follow-up field.

Tip: If you have the ChatGPT app installed, you can open your interaction in-app for a more conversational experience. The ChatGPT integration also saves interactions in your ChatGPT account when logged in.

Note that ChatGPT isn't perfect. As seen below, I wanted to know the artist behind a painting I captured, and it incorrectly identified both the artist and the artwork's title. I submitted the correct answer in a feedback form, then tried again over three weeks later and got a different response that was also dead wrong.

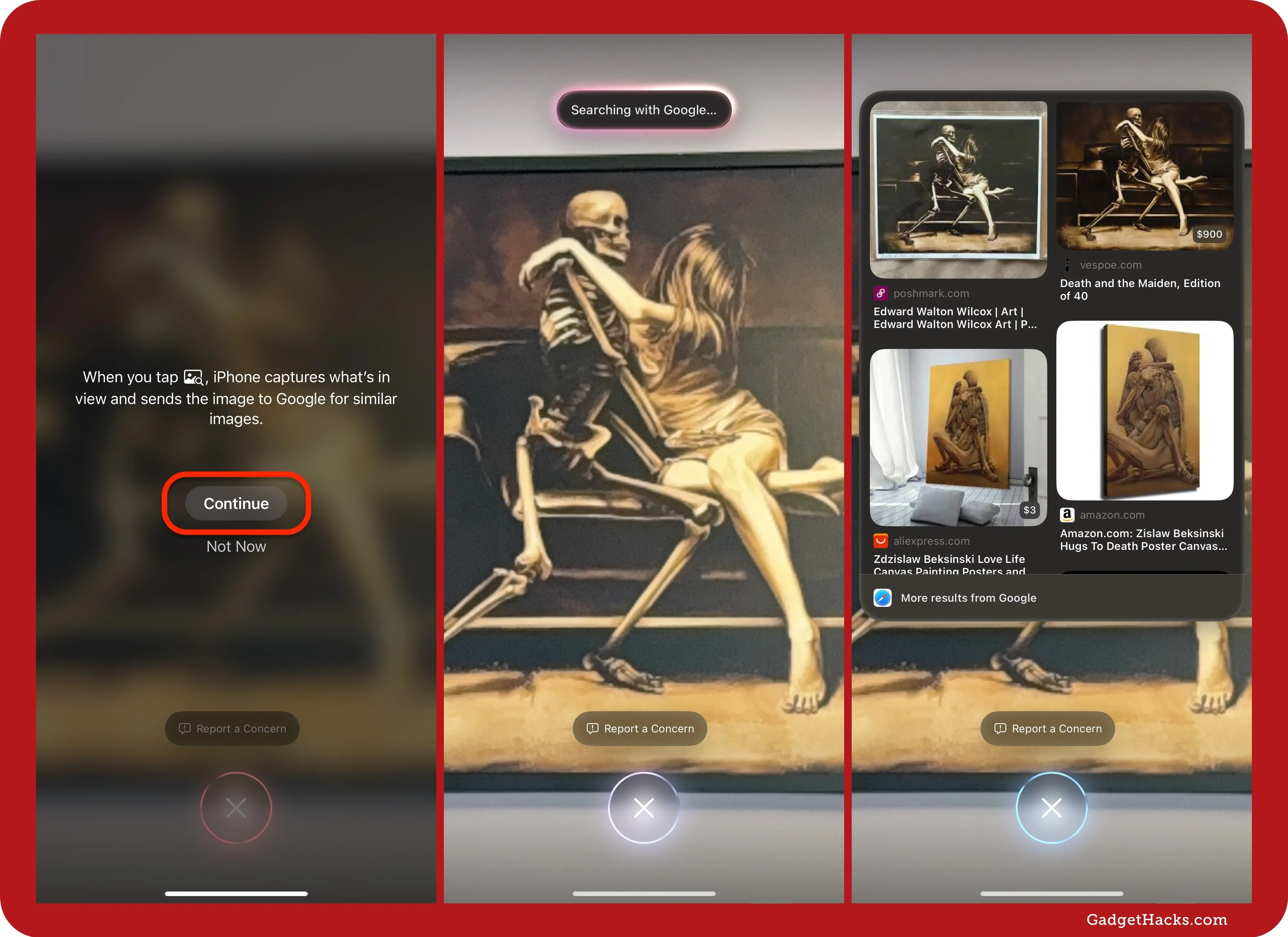

3. Search (Google)

Tap the image search icon to see matching images found on Google Search, which helps identify a specific product, place, or other visible elements. Like the Ask button, Search is a good backup when Apple Intelligence itself doesn't have any answers for you.

You can tap the Search button from the capture screen to immediately take a picture and ask Google Search to show similar images. Alternatively, you can tap Search after capturing a photo with the shutter button to see Apple's own results first.

You'll then see a window showing a selection of images pulled from Google Image Search, which you can scroll through or open in a web view to explore in detail.

If you're getting incorrect image matches from Google, you can "Report a Concern" to provide feedback.

Practical Examples of Visual Intelligence in Action

Here are some real-world scenarios where Visual Intelligence can come in handy:

- Exploring local eateries: Point your camera at a restaurant while walking down the street. Visual Intelligence can bring up customer ratings, reviews, menus, order links, and even hours of operation.

- Translating text on the go: When traveling, you can use Visual Intelligence to translate signs, menus, or other text from one language to another.

- Adding event details instantly: Snap a photo of an event flyer, and Visual Intelligence will allow you to create a calendar event from the details it captures.

- Learning about plants, animals, and objects: Take a photo of a unique plant or animal, and Visual Intelligence can use either ChatGPT or Google to help you identify it.

- Identifying artworks and paintings: If you're viewing a piece of art in a museum, hold the Camera Control button, tap Ask, and Visual Intelligence will use ChatGPT to identify the artist and piece (though, as seen above, the results may not be perfect every time).

- Getting practical answers: Need help identifying a dog's breed or the type of tree in a park? Visual Intelligence's ChatGPT integration can analyze the image and provide insight.

- Finding where to buy products: If you spot an item you like in a shop, take a quick photo with Visual Intelligence and use Google Search to find online retailers.

- Identifying famous landmarks: Snap a photo of a landmark, and Visual Intelligence can show you its location on the map, address, reviews, and other data. Tapping Search will also bring up similar images and details from Google.

- Helping with accessibility: Capture an image to translate text, summarize content for easier reading, have captured text read aloud to you, and more.

Tips for Using Visual Intelligence Successfully

- According to Apple, "information about places of interest will be available in the U.S. to start, with support for additional regions in the months to come."

- Visual Intelligence is automatically available on supported devices but may require permission to access ChatGPT or Google Search. Ensure your settings are configured for a seamless experience.

- When using ChatGPT, feel free to ask follow-up questions if the initial response is incomplete or unclear.

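- Visual Intelligence doesn't store captured images on your iPhone or share them with Apple. However, when you use Ask or Search, the captured image is sent to ChatGPT or Google for analysis.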

- If you receive inaccurate information, submit feedback to Apple, Google, or ChatGPT directly in the interface to help train their models.

Visual Intelligence on the iPhone 16 lineup brings users a new level of information access and flexibility. Whether you're exploring your surroundings, seeking product info, or identifying objects, this tool opens up new possibilities for real-world interaction and digital intelligence on the go. By drawing on services like ChatGPT and Google Search, Apple's Visual Intelligence offers a powerful combination of privacy, control, and immediate insight, all in the palm of your hand.

Cover photo and screenshots by Gadget Hacks
